Artificial intelligence, ethics and the world of talent

Contributors:

Kira Makagon

Chief Innovation Officer, RingCentral

Annie Hammer

Head of Technology and Analytics Advisory Americas, AMS

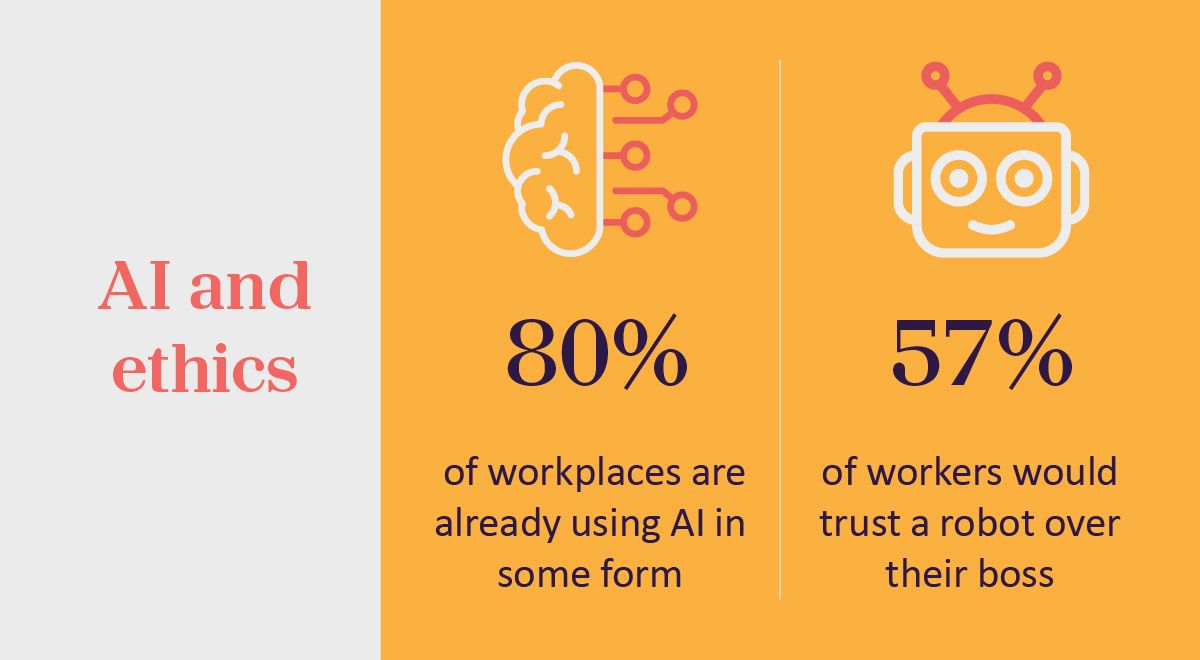

The emergence of AI has the potential to radically transform talent acquisition and retention. It promises enhanced efficiency, improved candidate matching, smoother application journeys and better prediction of cultural fit. According to the Equal Employment Opportunity Commission, up to 80% of American workplaces are already using AI in some form for employment decision-making.

However, the implementation of any new technology comes with potential downsides. The use of AI in talent acquisition poses several ethical challenges, particularly around issues of bias and discrimination. While AI aims to minimize biases, it can actually amplify existing ones if not calibrated and monitored correctly.

For example, AI systems that evaluate candidates' facial expressions have been shown to favor male, white and able-bodied individuals, according to research by MIT and Stanford University. Startlingly, the study found that the facial analysis systems tested misclassified the gender of dark-skinned women in up to 34.7% of images, more than a third.

Regulation is coming

Perhaps with such issues in mind, New York City became the first US city to implement an AI hiring law, with its Automated Employment Decision Tools (AEDT) law coming into force in July 2023. The legislation requires employers to notify candidates when they use AI in the hiring process and to commission annual audits of the technology to ensure their systems are not discriminatory. Companies that violate the rules face fines.

With further AI regulation in the pipeline, how can organizations create ethical, responsible AI systems that both future-proof their workforces and keep them on the right side of regulation?

“A lot of organizations that have been excited by AI are now having to grapple with regulation and understand how it affects their systems,” says Annie Hammer, head of technology and analytics advisory Americas at AMS.

“The big thing to understand is whether the technology you are using is actually AI in the first place. If it is, you need to consider the use case. Is it being used for automated decision-making or not? That’s the key issue,” she adds.

New York City's law has been met with criticism from all sides. Some critics argue that it is hard to enforce and potentially excludes many uses of automated systems in hiring, while businesses argue that it places an unnecessary burden on the recruitment process.

Such is the uncertainty around the law that many businesses are 'waiting and watching' to see its effect before committing to further AI tools, says Hammer.

Meeting ethical challenges

At the heart of this is the need for businesses to stay up to date with technological advances and with the impact artificial intelligence is having on their processes. Without adequate training, monitoring and process validation, companies expose themselves to both regulatory risk and poor adoption of the technology.

“We often see organizations that have implemented technology with AI capabilities over a year ago, but haven’t done any refresher training or updates. Not only do they have risk associated with this lack of training, but they also see falling adoption of the technology as they don’t adapt and develop their capabilities. There simply isn’t a maturity around training and governance with AI technology,” says Hammer.

Combating bias and discrimination requires a robust strategy for examining the outcomes of technology use. This might mean running parallel processes, with one group using the AI technology and another not, to compare outcomes and see how the tool is influencing decision-making.

It could also mean creating specific teams responsible for governing the ethical use of AI in talent tools.

"We're increasingly seeing new teams being set up to be responsible for hiring technology and its ethical use in business: groups like talent acquisition enablement, talent acquisition operations, talent acquisition innovation and solutions. Essentially, they are teams of business partners working across talent acquisition, legal and compliance, HR and IT to enable new ways of working in the recruitment function," says Hammer.

The crux of the matter is that decisions in talent acquisition must ultimately be made by a human. AI can aid the process and make the candidate journey easier, but it cannot be allowed to make the final decision. Organizations need to check that the recommendations their technology makes are being challenged by their people, not just waved through.

People-first approach

The same principle applies to other uses of AI at work. Kira Makagon is chief innovation officer at cloud communications platform RingCentral. She believes that businesses need to take a 'people-first' approach to the transformative potential of AI.

“In this digital age of communications and enhanced collaboration, artificial intelligence (AI) promises to be the driving force for most, if not all, of the transformation when it comes to ways of working. That promise, however, still depends on the millions of workers who will have an everyday experience with this new technology and therefore, workers must have a say in how it’s implemented and used,” says Makagon.

“Business leaders need to strike the right balance in a people-first approach to AI, as this is crucial to ensuring that the most efficient and functional foundations are laid for the smoothest adoption of AI. Humans make AI better and without their input, businesses will miss out on valuable insight that could determine how successful they are in the future,” she adds.

Hammer agrees that businesses need to look at the impact technology has on their people in a more concerted way. One way of doing this is to utilize AI to engage and develop existing employees.

“We focus a lot of content on external attraction, but AI can be better used to help existing employees find mobility opportunities and new roles. Another growing area is using AI to help guide employees on what skills they need to develop and what jobs they should take.

“A recent study on employee coaching found that some people actually trust AI more than their line managers when it comes to planning their next move,” adds Hammer.

AI regulation is set to grow globally, and businesses need to stay constantly aware of how changes affect their organization. Effective planning, people-led decision-making and skills development are key to meeting this challenge.

Navigating Talent Technology at AMS

If you wish to stay ahead of the curve and be AI-ready, keep regularly informed by visiting the new AMS Navigating Talent Technology resource, where you will find up-to-date and relevant thought leadership on the central role that AI and technology play within the world of talent. Explore whitepapers and thought leadership articles, as well as our recently launched Talent Technology Translator, which helps you talk tech fluently and make informed talent decisions, faster.

Written by the Catalyst Editorial Board
